Principal components are the directions in which the data varies most, that is, the directions along which the data is most spread out. To find the first one, we look for the straight line that best spreads the data out when the data is projected onto it. That line, the one showing the most variance in the data, is the first principal component.

PCA is a type of linear transformation on a data set that has values for a certain number of variables (coordinates) for a certain number of samples. The transformation fits the dataset to a new coordinate system in such a way that the greatest variance lies along the first coordinate, and each subsequent coordinate is orthogonal to the previous ones and has less variance. In this way, you transform a set of x correlated variables over y samples into a set of p uncorrelated principal components over the same samples.

Where many variables correlate with one another, they all contribute strongly to the same principal component. Each principal component accounts for a certain percentage of the total variation in the dataset. Where your initial variables are strongly correlated with one another, you can approximate most of the complexity in your dataset with just a few principal components. As you add more principal components, you summarize more and more of the original dataset: each additional component makes your approximation more accurate, but also more unwieldy.

Just like many things in life, eigenvectors and eigenvalues come in pairs: every eigenvector has a corresponding eigenvalue. Simply put, an eigenvector is a direction, such as "vertical" or "45 degrees", while an eigenvalue is a number telling you how much variance there is in the data in that direction. The eigenvector with the highest eigenvalue is, therefore, the first principal component.
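To make the link between eigenvectors, eigenvalues and principal components a little more concrete, here is a minimal sketch in plain Python for the two-variable case. It assumes the eigenvectors in question are those of the data's covariance matrix, which for two variables can be worked out in closed form. The function names `covariance_2d` and `pca_2d` and the sample measurements are made up for illustration; a real analysis would normally rely on a statistics package rather than hand-written code.

```python
import math


def covariance_2d(xs, ys):
    """Sample variances and covariance of two variables (n - 1 denominator)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs) / (n - 1)
    var_y = sum((y - mean_y) ** 2 for y in ys) / (n - 1)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / (n - 1)
    return var_x, var_y, cov_xy


def pca_2d(xs, ys):
    """Return [(eigenvalue, unit eigenvector), ...], largest eigenvalue first."""
    var_x, var_y, cov_xy = covariance_2d(xs, ys)
    if cov_xy == 0:
        # Uncorrelated variables: the original axes are already the components.
        pairs = [(var_x, (1.0, 0.0)), (var_y, (0.0, 1.0))]
        return sorted(pairs, key=lambda pair: pair[0], reverse=True)
    # Closed-form eigenvalues of the symmetric matrix
    # C = [[var_x, cov_xy], [cov_xy, var_y]].
    centre = (var_x + var_y) / 2
    spread = math.hypot((var_x - var_y) / 2, cov_xy)
    pairs = []
    for eigenvalue in (centre + spread, centre - spread):
        # The vector (cov_xy, eigenvalue - var_x) satisfies (C - eigenvalue * I) v = 0.
        vx, vy = cov_xy, eigenvalue - var_x
        length = math.hypot(vx, vy)
        pairs.append((eigenvalue, (vx / length, vy / length)))
    return pairs


# Made-up measurements of two variables for five samples (illustrative only).
xs = [2.5, 0.5, 2.2, 1.9, 3.1]
ys = [2.4, 0.7, 2.9, 2.2, 3.0]

components = pca_2d(xs, ys)
total_variance = sum(eigenvalue for eigenvalue, _ in components)
for rank, (eigenvalue, direction) in enumerate(components, start=1):
    share = eigenvalue / total_variance
    print(f"PC{rank}: direction={direction}, variance={eigenvalue:.3f}, share={share:.1%}")
```

The first pair returned is the direction with the largest eigenvalue, i.e. the first principal component, and each eigenvalue divided by the total gives the share of variation that component accounts for. The sign of each direction is arbitrary: flipping an eigenvector describes the same line.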
The mathematics underlying PCA is somewhat complex, so I won't go into too much detail, but the basics are as follows: you take a dataset with many variables, and you simplify that dataset by turning your original variables into a smaller number of "Principal Components".

But what are these exactly? Principal Components are the underlying structure in the data.

You'll also see how you can add a new sample to your plot by projecting it onto the original PCA. Of course, you want your visualizations to be as customized as possible, and that's why you'll also cover some ways of doing additional customizations to your plots!

As you already read in the introduction, PCA is particularly handy when you're working with "wide" data sets. In such cases, where many variables are present, you cannot easily plot the data in its raw format, making it difficult to get a sense of the trends present within. PCA allows you to see the overall "shape" of the data, identifying which samples are similar to one another and which are very different. This can enable us to identify groups of samples that are similar and work out which variables make one group different from another.
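Projecting a new sample onto an existing PCA, as mentioned above, comes down to two steps: subtract the means of the original data, then take the dot product with each principal direction. The sketch below shows this in plain Python. The helper `project_sample`, the means and the component directions are all hypothetical values standing in for a PCA that has already been fitted, not output from any particular tool.

```python
def project_sample(sample, means, components):
    """Project one sample onto previously fitted principal components.

    sample:     values of the new sample, one per original variable
    means:      per-variable means of the ORIGINAL data (not recomputed)
    components: unit-length principal directions, one tuple per component
    """
    centered = [value - mean for value, mean in zip(sample, means)]
    return [
        sum(c * w for c, w in zip(centered, direction))
        for direction in components
    ]


# Hypothetical values standing in for an already fitted two-variable PCA.
original_means = (5.0, 3.0)
fitted_components = [(0.6, 0.8), (-0.8, 0.6)]  # orthonormal directions

new_sample = (6.0, 5.0)
scores = project_sample(new_sample, original_means, fitted_components)
print(scores)  # coordinates of the new sample along PC1 and PC2
```

The key design point is that the new sample is centered with the means of the original data, so that it lands in the same coordinate system as the existing plot. If the original variables were also scaled before the PCA, the new sample would presumably need the same scaling applied first.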

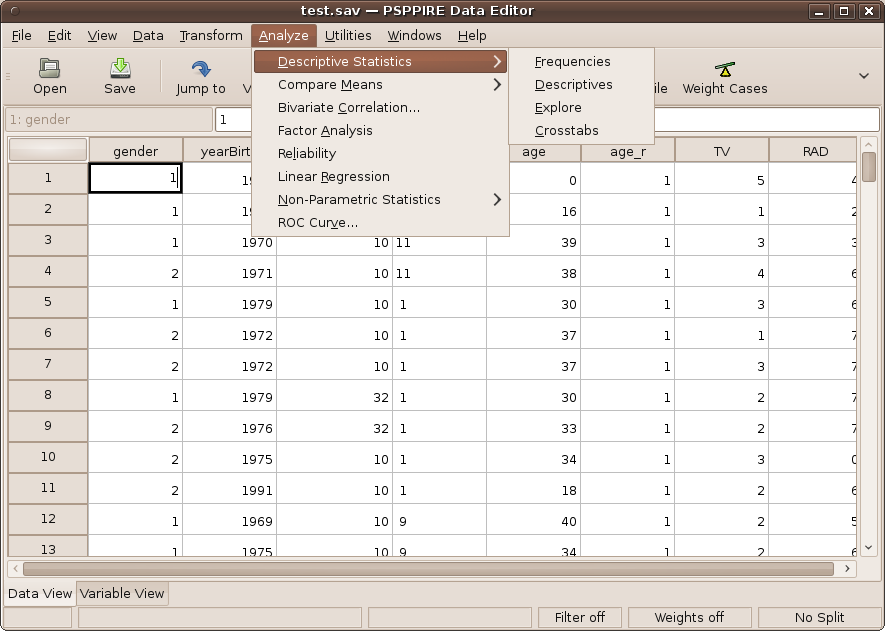

PSPP PRINCIPAL COMPONENT ANALYSIS HOW TO
